The Adaptive Organization – Leadership in the Age of Truth

At the beginning of this article series, I claimed that UX is broken. We examined how the linear model of the human being is a fiction and how artificial intelligence, as it is used today, often only scales these errors. In the previous part, I introduced an alternative: a shift from lines to vectors and from narratives to Nowcast thinking.

Now it is time to ask the most difficult question: what does this mean for leadership? What happens to an organization that decides to face reality without the protection offered by stories?

The brain’s compulsion to build stories is also a mechanism for avoiding responsibility

The human brain does not tolerate randomness. From an evolutionary perspective, survival has depended on pattern recognition: if something repeats, it is worth reacting to. This creates strength, but also illusion. The same mechanism forces us to build a narrative even when reality consists only of overlapping signals, interruptions, and situational deviations.

In organizations, narrative is not merely an explanatory model – it is a tool for distributing and diluting responsibility. When we talk about customer journeys, phases, and touchpoints, we break reality into manageable parts. At the same time, we fragment responsibility. Suffering occurs at the level of the system as a whole, but blame is always assigned to a single point: the wrong phase, a poor interaction, a failed touchpoint.

Narrative makes dynamic suffering modular. No one is responsible for the whole, because the whole has been decomposed into a story. Leadership then does not confront reality itself, but the map it has constructed. We are not leading human states, but our own explanation of them.

The illusion of control and the moral lie of dashboards

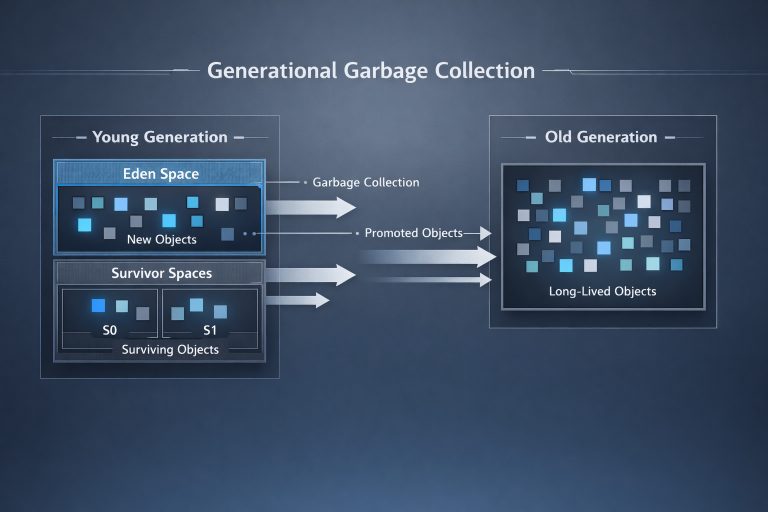

Why do organizations love static metrics such as NPS or CSAT scores? Not primarily because they tell the truth, but because they relieve responsibility. A number suggests that something has been measured. A dashboard creates the impression that someone is overseeing the whole.

But a dynamic state cannot be governed by a single number. When complex human reality is compressed into a green figure, a moral shift occurs: suffering becomes acceptable variance. We no longer ask why the system burdens people, but whether the metric is bad enough yet to justify action.

The sense of control arises precisely because the metric is simple. It is purchased at a price that is paid in invisibility.

Artificial intelligence and the moral choice: do you see deeper or explain better?

Artificial intelligence adds a new and dangerous layer to this structure. Language models make narratives infinitely scalable. They produce plausible explanations, polished summaries, and insight language that sounds like understanding.

At this point, leadership faces a moral choice that cannot be outsourced to technology:

Do you use AI to see deeper into signals, or do you use it to produce ever more convincing explanations for why your current strategy is correct?

The brain loves fluent language. That is why AI-generated narratives so easily feel like truth. But AI does not break the narrative bias – it can perfect it. Without a conscious decision to confront signals as they are, artificial intelligence becomes the most efficient instrument of self-deception in leadership.

The price of truth: signals do not support heroic stories

Nowcast thinking breaks this structure. It does not explain what happened or why a strategy succeeded. It tells us where the human being is right now. In a dynamic state, z_t, there is no plot, no climax, and no hero.

This is difficult for leadership because it requires abandoning heroic leadership. If reality is a continuously changing state, the leader is not the protagonist of a story but a maintainer of balance. PowerPoint is no longer the central tool, because data may never settle into a neat narrative.

Leadership is not about reaching an endpoint, but about continuous response to tension, load, and change. It requires the ability to remain present in ambiguity without first justifying decisions through a story.

Restoring empathy: responsibility without explanation

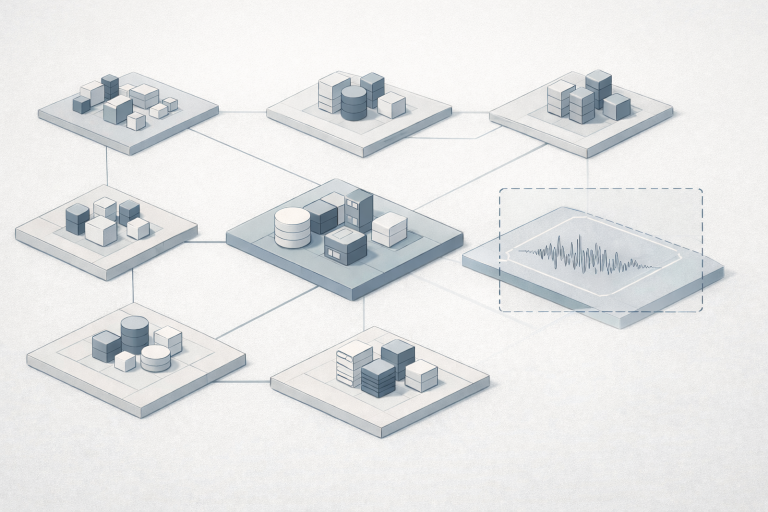

Paradoxically, it is precisely here that technology can restore humanity. When machines detect dynamic signals – load, rhythm changes, rising tension – what remains for humans is the part that cannot be automated: carrying responsibility without narrative protection.

No synthetic empathy, no explanation of why this happened, but a decision to change the conditions of the system because the signals indicate that it is necessary.

An adaptive organization is not the one with the best forecasting model. It is the organization that can tolerate the fact that truth never turns into a story.

UX is broken – and it forces leadership to change

The UX field has narrowed itself in a self-destructive way into the optimization of interfaces, even though the real issue is the rethinking of the entire logic of leadership. When we stop seeing humans as static users and begin to see them as dynamic states, the organization must become adaptive.

The final question is not how we fix UX metrics. The question is:

What happens to an organization that can no longer hide behind numbers, models, and the stories constructed by the human brain?

We do not need better forecasts.

We need better presence in the face of signals.

When reality is a dynamic state rather than a narrative, responsibility cannot be distributed across phases. It is always collective responsibility. That is why adaptive leadership is not the delegation of tasks, but the governance of a shared state.

This journey, its theory, and its practical realization are explored further in the book CX Is Broken: Escaping the Illusion of Control, to be published in January 2026.